From cocktail parties to public transit, there are competing sound sources in many everyday environments. If you want to listen to one specific sound, say a friend’s question, in a complex auditory setting, you have to distinguish between the sounds around you and focus on the one of interest. This situation is known as “the cocktail party problem”. Understanding how humans solve this problem can lead to advancements in hearing aid designs.

Understanding the Cocktail Party Problem

Imagine that you’re at a busy cocktail party on New Year’s Eve. Music and laughter fill the air in a cacophony of sound. You and a friend are chatting in the middle of the crowd, waiting in anticipation for the countdown to begin.

Now, close your eyes and think about trying to listen to your friend.

How did you pick your friend’s voice out from the mixture of noises around you?

The answer lies in the cocktail party effect, a concept popularized by Colin Cherry in 1953. The cocktail party problem, in turn, is the task of hearing and focusing on a sound of interest, such as speech, in an environment with competing sounds.

To do so, you need to overcome two challenges:

- Analyzing a mixture of sounds and picking out the particular sound of interest so that you can understand it

- Directing your attention to the sound of interest while ignoring other sounds

  - This can involve shifting your focus when listening to two conversations

These challenges grow as the party gets larger and there are more competing sound sources, making it harder to identify the speech signal of interest, recover it from the surrounding blend of sounds, and then pay attention to it. Despite the challenge, many people solve this problem naturally, without thinking much about it.

So how do we do it? Let’s take a look…

How Does the Human Body Solve the Cocktail Party Problem?

According to one source, a key factor is that our brains use grouping cues to determine which sounds belong together. For instance, the frequency components of an individual sound often share common amplitude changes. So when we encounter components at multiple frequencies that start and stop at the same time, our brains interpret them as belonging to the same sound source. Similarly, frequency components in a sound mix that have a harmonic relationship are often heard as one sound, since they likely come from the same source.
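The two grouping cues described above, common onset and harmonicity, can be sketched as a toy rule in Python. This is a minimal illustration under stated assumptions, not a model of how the brain actually does it: the `Component` type, the `group_components` helper, the known fundamental frequency `f0_hz`, and the 20 ms onset and 3 % frequency tolerances are all hypothetical choices made for this example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Component:
    """A single frequency component (partial) in a sound mixture (hypothetical type)."""
    freq_hz: float   # frequency of the component
    onset_s: float   # time at which the component starts

def is_harmonic(freq_hz, f0_hz, tol=0.03):
    """True if freq_hz is within a relative tolerance of an integer multiple of f0_hz."""
    n = round(freq_hz / f0_hz)   # nearest harmonic number
    if n < 1:
        return False
    return abs(freq_hz - n * f0_hz) <= tol * n * f0_hz

def group_components(components, f0_hz, onset_s, onset_tol_s=0.02, tol=0.03):
    """Split components into one candidate source and everything else.

    A component joins the source only if it starts at (nearly) the same time
    as onset_s AND sits on the harmonic series of f0_hz.
    """
    source, rest = [], []
    for c in components:
        common_onset = abs(c.onset_s - onset_s) <= onset_tol_s
        if common_onset and is_harmonic(c.freq_hz, f0_hz, tol):
            source.append(c)
        else:
            rest.append(c)
    return source, rest

# Hypothetical mixture: a voice with f0 = 200 Hz starting at 0.5 s,
# plus an inharmonic tone and a later-starting tone
mixture = [
    Component(200.0, 0.500),
    Component(400.0, 0.500),
    Component(601.0, 0.505),
    Component(470.0, 0.500),   # same onset, but not a harmonic of 200 Hz
    Component(800.0, 1.200),   # harmonic, but starts much later
]
source, rest = group_components(mixture, f0_hz=200.0, onset_s=0.5)
print([c.freq_hz for c in source])  # [200.0, 400.0, 601.0]
print([c.freq_hz for c in rest])    # [470.0, 800.0]
```

Requiring both cues at once is a deliberate simplification: the 800 Hz tone fits the harmonic series but is rejected because it starts later, while the 470 Hz tone starts on time but is rejected because it is inharmonic.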

Fluctuations in natural sounds also make it easier to tell sounds apart. Although different sounds can mask each other at times, their fluctuations give us glimpses of the underlying sounds in the noisy environment. Our auditory system can then fill in the gaps by correctly grouping these glimpsed fragments.
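The idea of glimpsing can be made concrete with a small numerical sketch that compares a steady masker with a fluctuating one of the same average power. Here a "glimpse" is assumed to be any frame in which the target is more than 3 dB above the masker; the frame levels, the 3 dB threshold, and the helper names are illustrative assumptions, not measured data.

```python
import math

def mean_power_db(levels_db):
    """Average levels in the power domain, then convert back to dB."""
    mean_power = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(mean_power)

def glimpse_proportion(target_db, masker_db, threshold_db=3.0):
    """Fraction of frames where the target exceeds the masker by more than threshold_db."""
    glimpses = sum(1 for t, m in zip(target_db, masker_db) if t - m > threshold_db)
    return glimpses / len(target_db)

target = [60.0] * 8                          # steady target speech level per frame (dB)
fluctuating = [70.0, 50.0] * 4               # masker that dips every other frame
steady = [mean_power_db(fluctuating)] * 8    # steady masker with the same average power

print(round(steady[0], 1))                      # 67.0
print(glimpse_proportion(target, steady))       # 0.0
print(glimpse_proportion(target, fluctuating))  # 0.5
```

Even though both maskers carry the same average power, only the fluctuating one leaves dips in which the target rises above the noise.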

Press play to be transported to a noisy cocktail party. At first, you can only hear a melange of sound. Then, you run into an old friend, who starts talking to you. As you focus on what your friend is saying, you are eventually able to filter out the other sounds of the party, effectively turning them into background noise.

Another way our brains solve this problem is by drawing on our knowledge of different classes of sounds. Going back to our cocktail party example, you’ll have a better chance of understanding your friend if they are speaking in coherent sentences than if they are speaking gibberish. You’ll also perceive their speech more accurately if they have an accent that is familiar to you.

Localization and visual cues also help us direct our attention to the correct sound source. For example, if a target sound comes from a different location than unwanted sounds, our spatial hearing makes it easier to pick out, and the rest recedes into background noise.

Challenges in Solving the Cocktail Party Problem

While most people with normal hearing can solve the cocktail party problem without much effort, people with impaired hearing may struggle in loud situations. To learn more, we reached out to Abigail Kressner of the Technical University of Denmark. According to Kressner, one generally accepted theory is that hearing-impaired people struggle in loud situations due to a “combination of audibility (i.e., whether the signals are loud enough for the hearing-impaired person to hear them) and reduced temporal resolution.”

Kressner explains that these issues may “influence a hearing-impaired listener’s ability to segregate different streams of sound within a complex acoustic scene like a cocktail party and that they also may have reduced attentional segregation.” Hearing-impaired listeners are also less able to “listen in the dips” between fluctuations of competing noise sources. As mentioned earlier, for listeners with normal hearing, these fluctuations provide glimpses of the target speech and therefore clues for understanding it. Replicating this ability in hearing aid algorithms remains a challenge for designers.

Designing Superior Hearing Aids That Account for the Cocktail Party Effect

The first objective in designing hearing aids is, of course, to make sounds audible for the user. Once that requirement is met, designers can add many other features, including:

- Directional microphones or beamformers to deemphasize sounds coming from a certain angle, such as behind the user

- Computational speech segregation systems to automatically differentiate between target speech and interfering background noise and to facilitate the suppression of the background noise
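One common way to build a directional microphone from two closely spaced omnidirectional microphones is a delay-and-subtract (first-order differential) array: the rear signal is delayed by the acoustic travel time between the microphones and subtracted from the front signal, which cancels sound arriving from directly behind. The sketch below computes that array's magnitude response for an ideal plane wave. The 12 mm spacing, the 343 m/s speed of sound, the 1 kHz test frequency, and the function names are assumptions for illustration; real hearing aids add equalization and adaptive processing on top of this basic idea.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C (assumed)

def delay_and_subtract_gain(theta_deg, freq_hz, spacing_m=0.012):
    """Magnitude response of a two-microphone delay-and-subtract array.

    theta_deg = 0 means the source is straight ahead on the microphone axis
    (front microphone reached first); 180 means directly behind.
    """
    tau = spacing_m / SPEED_OF_SOUND   # internal delay = on-axis travel time between mics
    omega = 2 * math.pi * freq_hz
    theta = math.radians(theta_deg)
    # Front signal minus rear signal delayed by tau:
    # H = 1 - exp(-j * omega * tau * (1 + cos(theta)))
    h = 1 - cmath.exp(-1j * omega * tau * (1 + math.cos(theta)))
    return abs(h)

def relative_db(theta_deg, freq_hz=1000.0, spacing_m=0.012):
    """Response at theta_deg relative to the on-axis front response, in dB."""
    front = delay_and_subtract_gain(0.0, freq_hz, spacing_m)
    g = delay_and_subtract_gain(theta_deg, freq_hz, spacing_m)
    return 20 * math.log10(max(g, 1e-12) / front)  # floor avoids log10(0) at the null

for angle in (0.0, 90.0, 180.0):
    print(angle, round(relative_db(angle), 1))
```

Running the loop shows 0 dB in front, roughly -6 dB at the side, and a very deep null behind, which is the cardioid pattern that deemphasizes sounds from behind the user. The front response of such an array also falls off toward low frequencies, which is one reason it is usually followed by equalization.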

Kressner notes that both approaches face the same challenge: distinguishing between sound signals and identifying the one the listener wants to hear. For instance, you may want to listen to a friend talking in front of you, or to someone on the other side of the room who has just called your name.
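A common reference point in computational speech segregation research is the ideal binary mask: for each time-frequency bin, keep the mixture if the target is stronger than the noise by more than a local criterion, and discard it otherwise. The mask is "ideal" because it is computed from the separate target and noise signals, which a real hearing aid never has; in other words, it presupposes the answer to the question of which signal is the target. The sketch below uses small hypothetical power values on a 2 × 2 grid (rows are frequency channels, columns are time frames) and a 0 dB criterion, both chosen purely for illustration.

```python
import math

def ideal_binary_mask(target_pow, noise_pow, lc_db=0.0):
    """Return 1 for bins whose local SNR exceeds lc_db, else 0.

    target_pow and noise_pow are equally shaped 2-D lists of positive
    powers (rows = frequency channels, columns = time frames).
    """
    return [
        [1 if 10 * math.log10(t / n) > lc_db else 0 for t, n in zip(t_row, n_row)]
        for t_row, n_row in zip(target_pow, noise_pow)
    ]

def apply_mask(mixture_pow, mask):
    """Zero out the mixture bins where the mask is 0."""
    return [[m * x for m, x in zip(m_row, x_row)]
            for m_row, x_row in zip(mask, mixture_pow)]

# Hypothetical example values
target = [[4.0, 0.1],
          [0.5, 2.0]]
noise = [[1.0, 1.0],
         [1.0, 1.0]]
# Assume uncorrelated signals, so their powers approximately add in each bin
mixture = [[t + n for t, n in zip(t_row, n_row)] for t_row, n_row in zip(target, noise)]

mask = ideal_binary_mask(target, noise)
print(mask)                       # [[1, 0], [0, 1]]
print(apply_mask(mixture, mask))  # [[5.0, 0.0], [0.0, 3.0]]
```

Practical segregation systems must instead estimate such a mask from the mixture alone, for instance with a trained classifier, and that estimation step is where the difficulty of knowing which voice the listener cares about comes back in.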

A hearing aid. Image by Udo Schröter — Own work. Licensed under CC BY-SA 3.0, via Wikimedia Commons.

How will the hearing aid know which signal the user wants to listen to? The COCOHA project proposes that brain signals, measured with electroencephalography (EEG), could be the answer. This approach still requires a lot of work, though, including further research into decoding a listener’s attention and then using that information to steer the device and suppress unwanted signals.

Finding Inspiration for Designing Better Hearing Aids

Let’s move away from our imaginary cocktail party and instead take a walk through a dense forest. Here, on warm spring evenings, you may hear a chorus of Cope’s gray treefrogs. Although the individual calls sound similar, fitter males produce faster and longer calls. The females listen for these calls, tuning out extraneous noise and tuning in to the calls of interest. Research into how these frogs achieve this feat, and how their ears differ from human ears, could help improve the design of both hearing aids and speech recognition systems.

Finding inspiration for improving hearing aid designs in nature; a photo of a Cope’s gray treefrog. Image by Fredlyfish4 — Own work. Licensed under CC BY-SA 4.0, via Wikimedia Commons.

So far, much of the knowledge behind hearing aids that account for the cocktail party problem “has been acquired via very controlled, yet unrealistic laboratory experiments,” Kressner notes. This isn’t ideal, because there is “a disconnect between what we see in the laboratory and what we see in the real world.” To close this gap, Kressner suggests using, for example, numerical modeling or more realistic psychoacoustic reproduction techniques to better understand what happens in real-world listening.

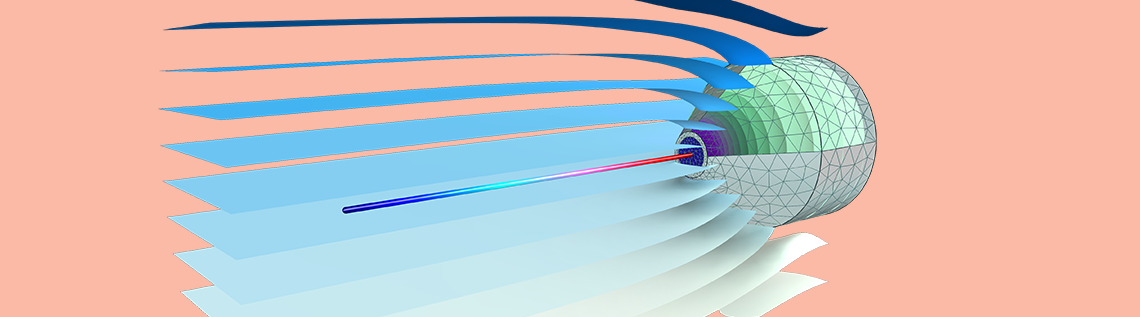

Finding inspiration in simulation; a probe tube microphone, which can be used in association with hearing aids, simulated with the COMSOL Multiphysics® software.

Learn More About the Cocktail Party Problem and Acoustics

- Read about recent research into the cocktail party problem in this Current Biology article

- A.W. Bronkhorst, “The Cocktail Party Phenomenon: A Review of Research on Speech Intelligibility in Multiple-Talker Conditions,” Acta Acustica united with Acustica, 2000.

- Analyzing Hearing Aid Receivers with Lumped-Parameter Modeling

- Analyzing a Probe Tube Microphone Design with Acoustics Simulation
